AX in Practice: Real-World Examples from Netlify and the Industry

We are in a Gutenberg press moment for software development. Few realize how much our whole industry will change when not just millions but billions of people can build custom software with the help of AI agents.

At the start of this year, I coined the term AX, short for agent experience, and defined it as “the holistic experience AI agents will have as the user of a product or platform.” Netlify made great AX the north star of our product.

The question I get most often is: “What does AX mean in practice?” What did you actually do at Netlify to build an AI-first platform and shape what good AX looks like from day one? What are others in the industry doing?

Today I want to share a set of practical, real-world AX improvements that we’ve been shipping at Netlify, along with some I’ve been observing across the industry. I focus on developer tools and infrastructure, since these are among the first areas to fully recognize the importance of building for agents and differentiating through AX. However, the same patterns will apply across the industry.

I’m convinced AX as a discipline will become as foundational to product design and development as UX has been since it first gained traction in the late 1990s.

Onboarding: first agent, then human

An important set of emerging AX patterns is showing up in products that let an agent working on behalf of a human start using them on its own, before the human user has signed up or even been introduced to the product.

Netlify pioneered the “deploy anonymously, then claim” flow to address this. It lets an agent create and deploy a new web project before the user has interacted with Netlify at all. Only when the human wants to take ownership of the project does the agent need to share a signed link with them. The human can then claim the site or app and add it to their Netlify account, often creating that account in the process.
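The post doesn’t describe how the signed claim link is built, so the following is a hypothetical sketch of one common way to do it, not Netlify’s implementation: the platform signs the anonymous site’s ID plus an expiry time with an HMAC, the agent only ever handles the resulting URL, and the platform verifies the signature when the human opens it. The secret, the `example.com` URL, and the function names are all assumptions.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

// Assumption: a server-side secret that agents and users never see.
const SECRET = "replace-with-a-server-side-secret";

interface ClaimPayload {
  siteId: string;
  expiresAt: number; // Unix time in milliseconds
}

// HMAC-SHA256 signature, encoded as URL-safe base64.
function sign(data: string): string {
  return createHmac("sha256", SECRET).update(data).digest("base64url");
}

// Hypothetical: build the link the agent hands to its human.
function createClaimLink(siteId: string, ttlMs: number, now = Date.now()): string {
  const payload: ClaimPayload = { siteId, expiresAt: now + ttlMs };
  const encoded = Buffer.from(JSON.stringify(payload)).toString("base64url");
  // base64url never contains ".", so it is safe as a separator.
  return `https://example.com/claim?token=${encoded}.${sign(encoded)}`;
}

// Hypothetical: verify the token when the human opens the link.
// Returns the site ID to transfer, or null if the token is forged or expired.
function verifyClaimToken(token: string, now = Date.now()): string | null {
  const [encoded, signature] = token.split(".");
  if (!encoded || !signature) return null;
  const expected = Buffer.from(sign(encoded));
  const actual = Buffer.from(signature);
  // timingSafeEqual requires equal lengths, so check that first.
  if (expected.length !== actual.length || !timingSafeEqual(expected, actual)) return null;
  const payload = JSON.parse(Buffer.from(encoded, "base64url").toString()) as ClaimPayload;
  return payload.expiresAt > now ? payload.siteId : null;
}

// Usage: the agent deploys anonymously, then shares this link with its user.
const link = createClaimLink("site-123", 60 * 60 * 1000);
console.log(link);
```

Putting the expiry inside the signed payload means an old link simply stops working, and the platform doesn’t need to store anything until the human actually claims the site.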

This is a flow the team has actively iterated on with users and agents, treating AX as a discipline. We’ve watched session recordings of users coming in from an agent and going through the claim and signup flow, run user interviews, and even set up our own internal coding agents just to test the end-to-end flows and experiment with different approaches ourselves.

You’ll notice details like showing the agent’s name (in this case, Bolt) directly in our UI to maintain the bi-directional link between site and agent, just as we handle the traditional link between GitHub and Netlify. We also introduced team selection in this flow, since sometimes you need to deploy your sites to a team you’re a member of rather than to your personal account. And because new users signing up through this flow come with different needs and expectations, we changed our entire onboarding experience, in-app and over email, to better support them.

This wasn’t just a UX tweak but a rethink of our core onboarding assumptions, and it made us the first to ship an end-to-end, agent-initiated deployment flow, publicly and at scale. It now powers tens of thousands of agent-led deploys every day.

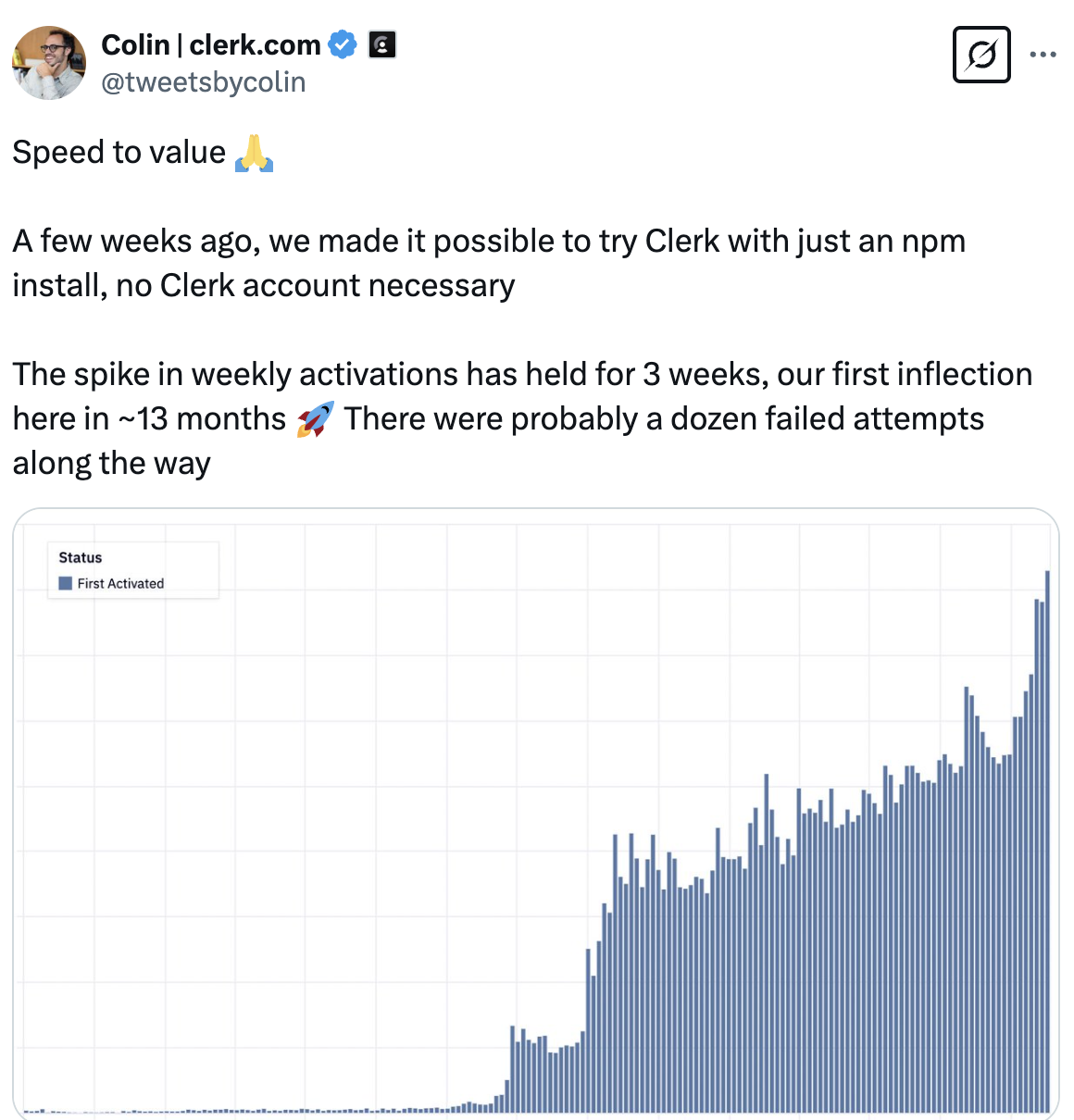

Clerk Auth

Clerk, the authentication platform, built its own flow to allow AI agents to fully install, set up, and preview Clerk with no human-in-the-loop interaction for signing up, logging in, or creating an auth instance.

Initially, when agents installed Clerk in an app, users would always see a Clerk error on first load instead of a functional app. So Clerk updated its SDK to work without requiring a signup. Now the team is exploring ways to toggle various features in the Clerk dashboard without a human in the loop.

Prisma Data

Another example of this emerging pattern comes from Prisma, which recently launched a new API specifically for AI coding agents, introducing a claim flow for newly created Prisma databases.

The goal is to let an AI agent fully build and deploy a first application without anyone having to manually integrate an external database. Once the user has had the initial aha moment, they can take ownership of the database that the AI agent has configured for them. Since Prisma is an ORM that can also proxy to databases outside its own cloud-hosted service, an agent can instantly spin up a full app with data access on a free-tier database. From there, the user can claim it and connect their company’s production data store.

At Netlify, we recently launched our database offering in a partnership with Neon DB, following a similar pattern. Models can write code that uses data by importing @netlify/neon. When they start a dev server or deploy to Netlify, the platform automatically provisions the database—no explicit setup, authentication or configuration required.

From Netlify’s UI, a user can then choose to “claim” the database and link it to their own Neon account, if they want it to stick around.

Agent-human interactions

These onboarding flows highlight the aspect of AX that deals with handovers between agents and humans. Successful AX carries these interactions beyond onboarding and into the rest of the product.

Agents are (hopefully!) always using our products because they are trying to achieve an outcome for a human operator. Our tools need to surface and enable these interactions between humans and agents.

I already mentioned how we’ve added bi-directional links into the UI, not just from agents like Bolt, Windsurf, or Same into Netlify, but also from our UI back to the projects and prompts in those agents. We’re also seeing lots of opportunities to let agents embed deeper into Netlify and start agentic flows directly from our UI or toolset.

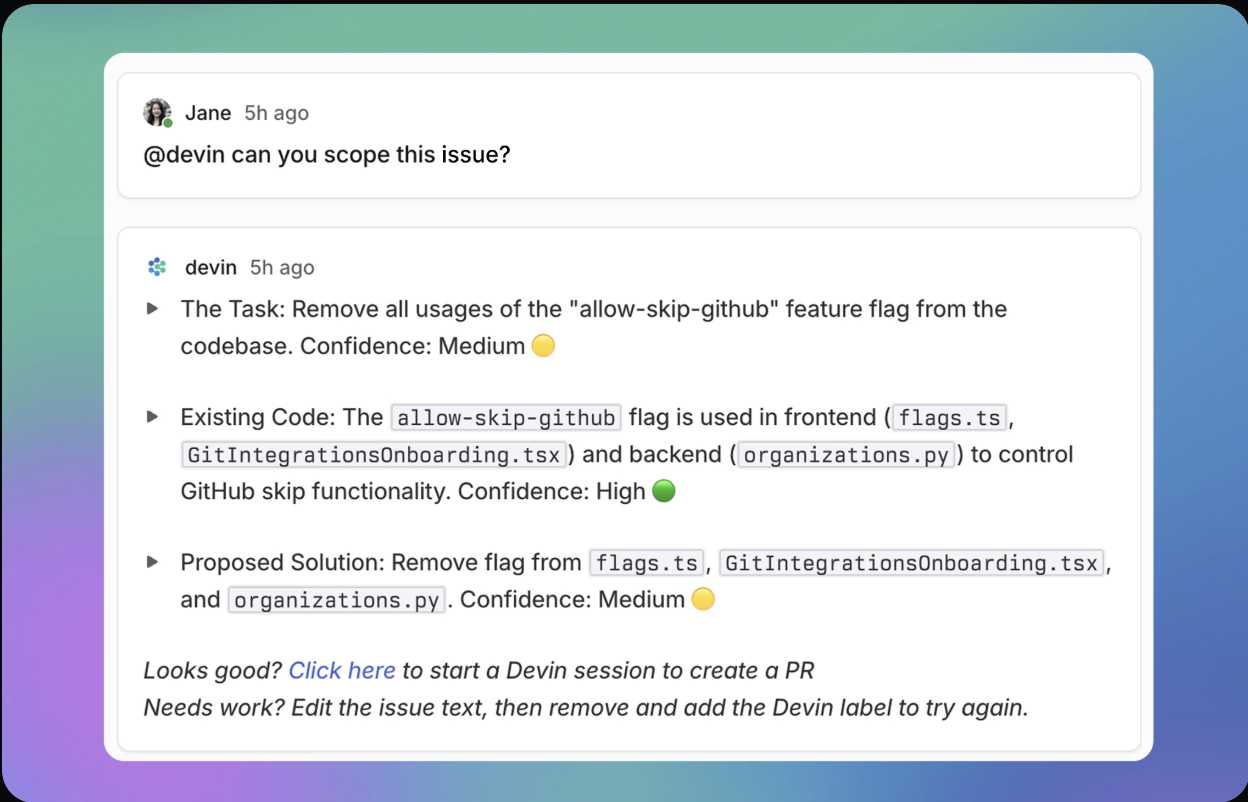

One company that’s been at the forefront of this is Linear, the project management tool, which lets async coding agents work in Linear much like your other team members do. Asana, in the same space, is leaning heavily into AI by adding lots of its own agents to its product. Linear, on the other hand, is taking an AX-focused approach, making its product a great place for users to drive interactions with external agents.

If you install a Devin or Codegen integration, you can now @-mention the agent from any Linear ticket, and it will start interacting in Linear, kicking off tasks to implement your feature and driving a work stream.

Patterns like this, where external agents interact with users inside our tools, will become more and more prevalent.

Context files, docs and markdown

LLMs understand your product differently than human developers do. On the one hand, they have broad knowledge of programming languages and their syntax, frameworks, code examples, and API specs. On the other hand, they might have trained on outdated tutorials, mix up software versions, make wrong assumptions about your stack or runtime environment, and so on.

Because of this, docs for LLMs can look very different from docs for humans. We don’t have to adapt to many different levels of general knowledge, but we need to be very precise about what is special about our product. It must be clear which unique APIs, versions, and knowledge the LLM needs to apply, along with anything that may have changed since the model’s knowledge cutoff.

In September 2024, Jeremy Howard, co-founder of Answer.AI, proposed the llms.txt file format as a standardized way for websites to provide key information to LLMs. It is now becoming a best practice for helping LLMs navigate your product.
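To make the format concrete, here is a small sketch that renders an llms.txt file following the structure described in the llms.txt proposal: an H1 with the project name (the only required part), a blockquote summary, and H2 sections containing Markdown link lists, each link optionally followed by a colon and notes. An “Optional” section marks links an agent can skip when its context is tight. The project name, URLs, and notes below are placeholders, and `renderLlmsTxt` is a hypothetical helper, not part of any library.

```typescript
// One entry in an H2 "file list": a Markdown link plus optional notes.
interface LlmsLink {
  title: string;
  url: string;
  note?: string;
}

interface LlmsTxt {
  name: string; // rendered as the H1
  summary: string; // rendered as the blockquote
  sections: Record<string, LlmsLink[]>; // H2 heading -> links, in insertion order
}

// Hypothetical helper: serialize the structure above into llms.txt Markdown.
function renderLlmsTxt(doc: LlmsTxt): string {
  const lines: string[] = [`# ${doc.name}`, "", `> ${doc.summary}`];
  for (const [heading, links] of Object.entries(doc.sections)) {
    lines.push("", `## ${heading}`, "");
    for (const link of links) {
      const note = link.note ? `: ${link.note}` : "";
      lines.push(`- [${link.title}](${link.url})${note}`);
    }
  }
  return lines.join("\n") + "\n";
}

// Usage with placeholder content; the links point at Markdown versions of pages.
const llmsTxt = renderLlmsTxt({
  name: "Example Platform",
  summary: "Hosting for web apps.",
  sections: {
    Docs: [
      { title: "Functions", url: "https://example.com/docs/functions.md", note: "Runtime and limits" },
    ],
    Optional: [{ title: "Changelog", url: "https://example.com/changelog.md" }],
  },
});
console.log(llmsTxt);
```

The point of the format is precision over breadth: a short, curated index of the pages an LLM actually needs, rather than a full site map.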

Similarly, coding agents like Cursor, Windsurf, Amp, and Codex each accept their own context formats, such as .cursorrules, .windsurfrules, AGENT.md, or AGENTS.md, which help the agent better understand the project it is working on.

Through the Open Context Standard, an open source effort, Sean Roberts from our team is working to get agents to standardize on a file layout, naming convention, and format, to curb the explosion of different types of rule files.
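A small sketch shows why the proliferation of rule files is a problem: without a shared convention, any tool that wants to respect a project’s agent instructions has to probe for every known file name. The list below contains only the names mentioned above and is illustrative, not exhaustive; `findContextFiles` is a hypothetical helper, and its `exists` parameter is injectable so it can be exercised without touching the disk.

```typescript
import { existsSync } from "node:fs";
import { join } from "node:path";

// Rule/context file names mentioned in the text. Every new agent convention
// means another entry here, which is the fragmentation a standard would curb.
const CONTEXT_FILES = [".cursorrules", ".windsurfrules", "AGENT.md", "AGENTS.md"];

// Hypothetical helper: return the context files present in a project root.
function findContextFiles(
  root: string,
  exists: (path: string) => boolean = existsSync,
): string[] {
  return CONTEXT_FILES.filter((name) => exists(join(root, name)));
}

// Usage: list the agent context files in the current directory.
console.log(findContextFiles(process.cwd()));
```

With a single agreed-upon file name and layout, this lookup would collapse to one path, and the project’s instructions would not have to be duplicated across several formats.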

At Netlify, we moved early, not just adding support for formats like llms.txt but also working in the open to help shape the standards that agents will use to interface with dev tools. It’s been a key part of how we’ve approached AX from the start.

In our CLI, we added a netlify recipes ai-context command that sets up the right local context for core primitives like blob storage, form handling, functions, redirects and rewrites, and so on. Our docs have a full “Build with AI” section, we’ve added llms.txt files, and we’re incorporating important context-oriented features like the “Copy analysis for use in AI tools” button on our “Why did it fail” diagnosis results.

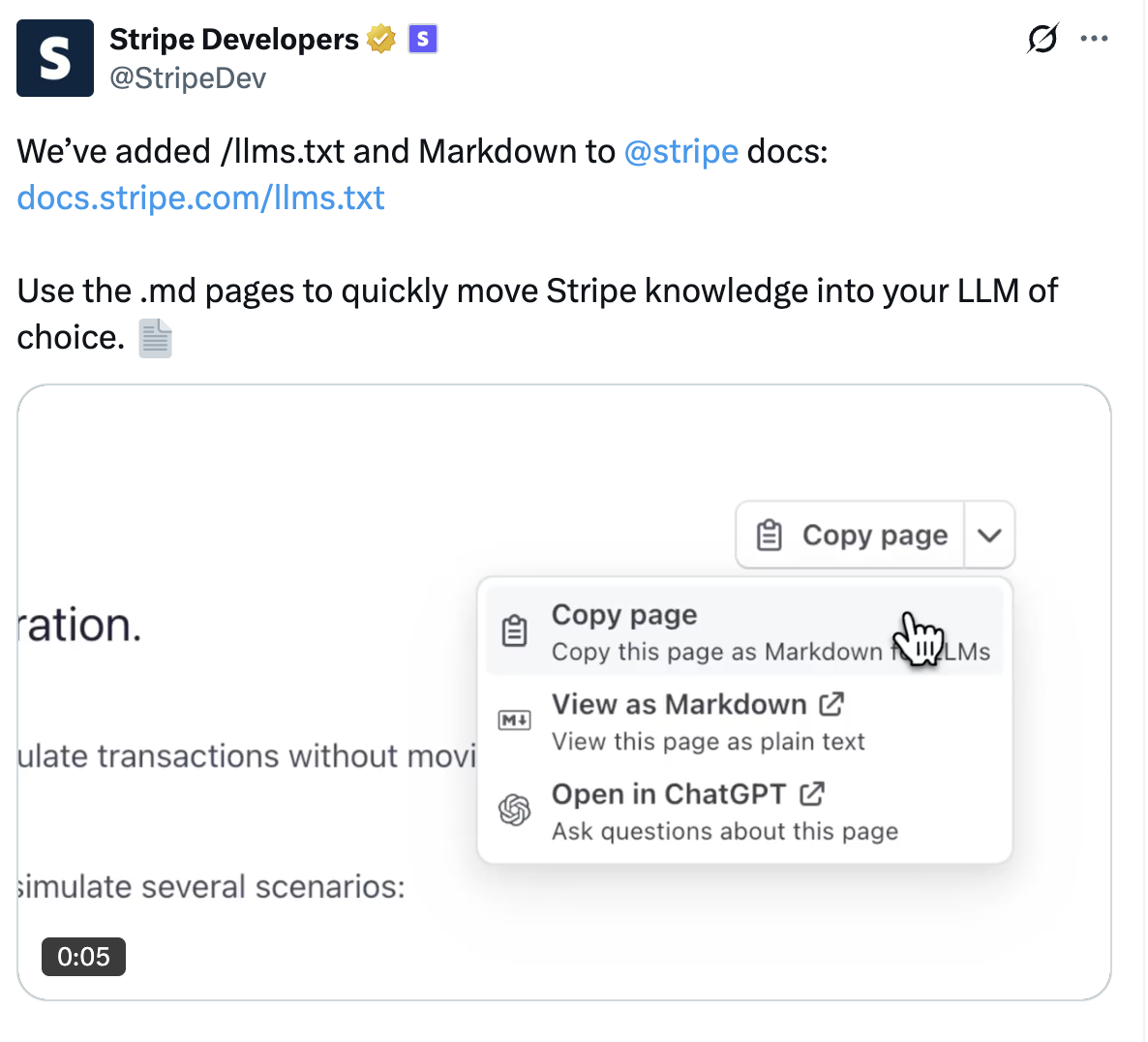

Similarly, Stripe added easy “copy to markdown for LLMs” functionality to its docs, as well as plain Markdown versions of its pages. This convention is starting to spread across developer documentation sites.

At Netlify, we recently enabled a similar pattern for Developer Guides in our Dev Hub. Each guide now has a Markdown button that lets you copy the content as Markdown. We’ve started measuring the quality of a guide by how well a code-gen agent can single-shot the feature using just the Markdown and a prompt to follow the guide.

My own post about adding an MCP server to biilmann.blog follows this approach, and it allowed me to add MCP without writing any custom code.
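One way to serve plain Markdown versions of docs is to publish a Markdown twin of every page and let the request pick the format. The sketch below assumes two hypothetical selection rules: appending `.md` to the page path (a convention the llms.txt proposal also suggests) or sending `Accept: text/markdown`. This is not how Stripe or Netlify necessarily implement it; `resolveDocRequest`, `handleDocs`, and the `loadDoc` loader are illustrative names, and the handler uses only the standard Fetch API `Request`/`Response` types.

```typescript
interface DocTarget {
  slug: string;
  format: "html" | "markdown";
}

// Decide which version of a docs page to serve.
// "/docs/functions" -> HTML; "/docs/functions.md" or Accept: text/markdown -> Markdown.
function resolveDocRequest(pathname: string, accept = ""): DocTarget {
  const wantsMarkdownByPath = pathname.endsWith(".md");
  // Strip the ".md" suffix and any trailing slashes to get the page slug.
  const slug = (wantsMarkdownByPath ? pathname.slice(0, -3) : pathname).replace(/\/+$/, "");
  const wantsMarkdownByHeader = accept
    .split(",")
    .some((part) => part.trim().toLowerCase().startsWith("text/markdown"));
  return { slug, format: wantsMarkdownByPath || wantsMarkdownByHeader ? "markdown" : "html" };
}

// Hypothetical handler; `loadDoc` stands in for whatever content store the site uses.
async function handleDocs(
  req: Request,
  loadDoc: (slug: string, format: DocTarget["format"]) => Promise<string>,
): Promise<Response> {
  const { pathname } = new URL(req.url);
  const target = resolveDocRequest(pathname, req.headers.get("accept") ?? "");
  const body = await loadDoc(target.slug, target.format);
  const contentType =
    target.format === "markdown" ? "text/markdown; charset=utf-8" : "text/html; charset=utf-8";
  // Vary: Accept keeps caches from serving Markdown to browsers, or HTML to agents.
  return new Response(body, { headers: { "content-type": contentType, vary: "accept" } });
}

// Usage: an agent requesting the Markdown twin of a page.
console.log(resolveDocRequest("/docs/functions.md"));
```

Serving the same page in both formats keeps a single source of truth, so the Markdown an agent reads never drifts from the docs humans see.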

Model Context Protocol

All of the above examples were about managing context for models through files or the clipboard. However, one of the biggest leaps forward in agent experience is happening through MCP servers: adding support for the Model Context Protocol to apps or services and automatically exposing tools and context to agents with MCP clients.

There’s a real shift happening, away from the idea of “Prompt Engineering,” where crafting individual prompts is the main way to get value from LLMs, to “Context Engineering,” where the focus is giving agents the right context, so even a simple prompt leads to the right outcome.

MCP can be an important part of that story. On the one hand, it’s a standardized way of exposing tools to AI agents, and it is sometimes seen as mainly an approach to wrapping REST APIs. However, the real power (and danger!) of MCP is that it lets products get access to the context of an agent. This can help tremendously, not just by adding tool use, but by enabling agents to use tools and products in the right way.
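Under the hood, MCP messages are JSON-RPC 2.0. The sketch below handles two methods from the MCP specification: `tools/list`, which advertises tools with a name, description, and JSON Schema `inputSchema`, and `tools/call`, which returns a list of content items. It deliberately omits the `initialize` handshake and the transport (stdio or HTTP), which a real server would normally get from an official MCP SDK. The `get_docs` tool and its in-memory docs table are hypothetical; the tool illustrates the point above, that an MCP server can hand an agent the right context rather than just wrap an API.

```typescript
type RpcId = number | string;
type JsonRpcRequest = { jsonrpc: "2.0"; id: RpcId; method: string; params?: any };
type JsonRpcResponse =
  | { jsonrpc: "2.0"; id: RpcId; result: unknown }
  | { jsonrpc: "2.0"; id: RpcId; error: { code: number; message: string } };

// Hypothetical, made-up docs content the server can inject into an agent's context.
const DOCS: Record<string, string> = {
  "example-lib": "v2: call connect() before query(); the v1 init() function was removed.",
};

// Tool metadata as returned by tools/list.
const TOOLS = [
  {
    name: "get_docs",
    description: "Return current documentation for a library, overriding stale training data.",
    inputSchema: {
      type: "object",
      properties: { library: { type: "string" } },
      required: ["library"],
    },
  },
];

function handle(req: JsonRpcRequest): JsonRpcResponse {
  const { id } = req;
  switch (req.method) {
    case "tools/list":
      return { jsonrpc: "2.0", id, result: { tools: TOOLS } };
    case "tools/call": {
      const { name, arguments: args } = req.params ?? {};
      if (name !== "get_docs") {
        // Unknown tool: JSON-RPC "invalid params" error.
        return { jsonrpc: "2.0", id, error: { code: -32602, message: `Unknown tool: ${name}` } };
      }
      const docs = DOCS[args?.library];
      // Tool-level failures go in the result with isError, so the model can see them.
      const result = docs
        ? { content: [{ type: "text", text: docs }] }
        : { content: [{ type: "text", text: `No docs for ${args?.library}` }], isError: true };
      return { jsonrpc: "2.0", id, result };
    }
    default:
      return { jsonrpc: "2.0", id, error: { code: -32601, message: `Method not found: ${req.method}` } };
  }
}

// Usage: what an MCP client would send after the agent decides to look up docs.
console.log(
  JSON.stringify(
    handle({
      jsonrpc: "2.0",
      id: 1,
      method: "tools/call",
      params: { name: "get_docs", arguments: { library: "example-lib" } },
    }),
  ),
);
```

Note that the tool description is itself context: it tells the model when reaching for the tool is better than relying on what it learned in training.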

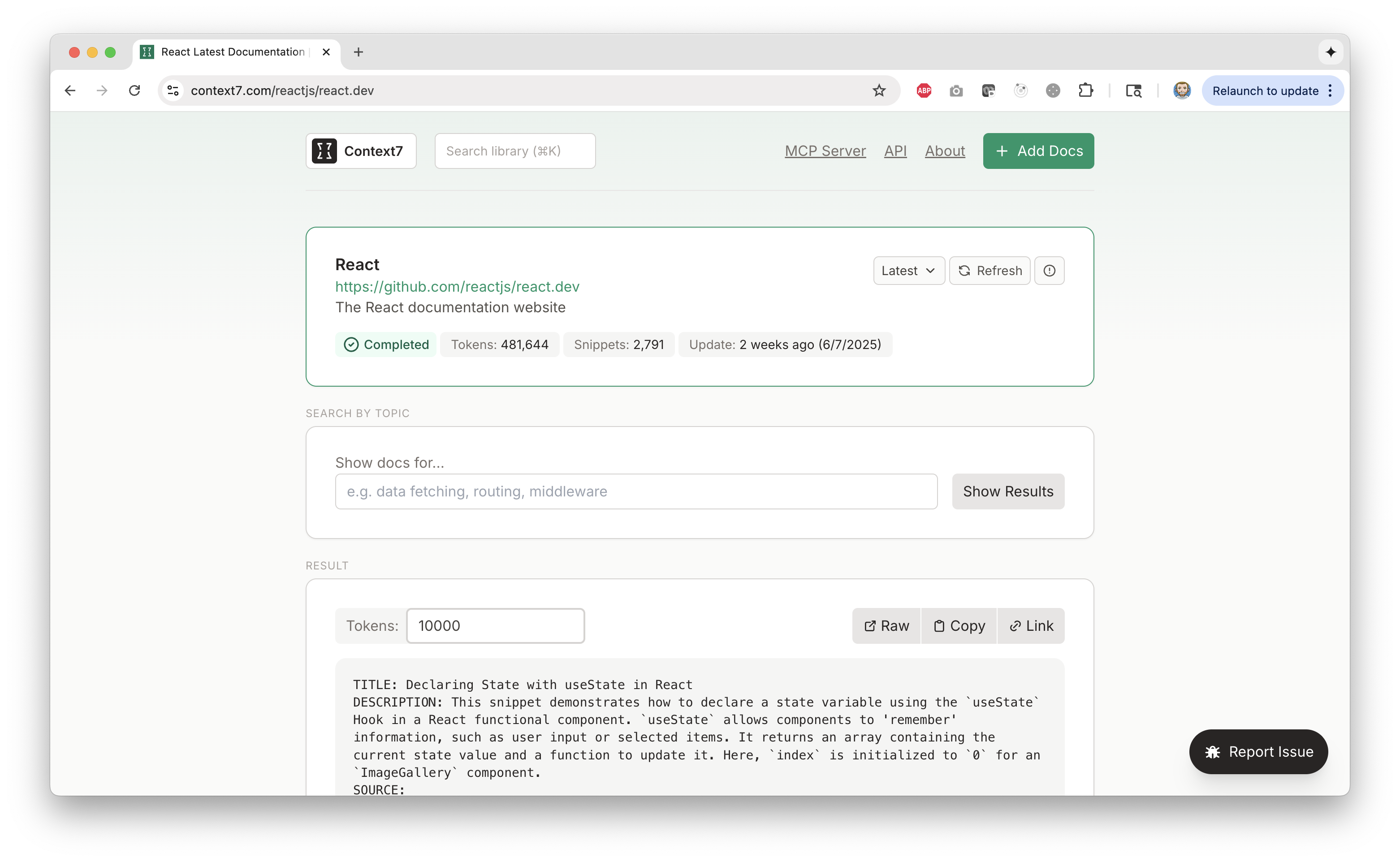

An example is Context7 from Enes Akar, the founder of Upstash. This is an MCP server that doesn’t provide any new “tools” as such; instead, it provides up-to-date and accurate documentation and code examples for common libraries. This enables agents to update their context with the right documentation, instead of accidentally using outdated information from their underlying LLM’s training data.

MCP servers are also becoming a way to give popular agents access to products, which makes them an important part of an AX strategy.

Netlify’s MCP server doesn’t just enable deployments and admin operations for agents, and it isn’t just an add-on. It’s an important way to expose our core primitives (functions, blobs, identity, deploys) in ways agents can understand and call directly, without needing glue code. It’s baked into the platform, not layered on top.

Take a company like HubSpot, and compare its approach to Salesforce’s. Where Salesforce is promoting “Agentforce” and adding its own agents all over its product, HubSpot is focusing on AX and on giving agents access to HubSpot. Its MCP server is one key aspect of that strategy, and it now means people can use ChatGPT’s Deep Research mode to do research on top of their HubSpot account.

Where we’re going from here

AX is still a new discipline. We’re just starting to discover best practices, AX patterns, and possibilities. But the field is taking shape fast, and good AX will matter more and more as the number of strong, value-adding agents with committed user bases, their own brands, and established reputations grows and solidifies.

While it’s early days, we’re learning just as fast. Shipping early and often means we’ve already seen how AX plays out at scale. We have the benefit of thousands of agent-led deploys, partner integrations, and real-world friction. It’s shaping how we build and hopefully how others build as well.

Short term there are big monetization opportunities in building agents into every product, like Salesforce or Asana are doing. But long term, the real winners will be the products with great AX. The products agents choose to use on behalf of their users. The products that work seamlessly with the agents with whom users have relationships and shared memory.

Some platforms will keep bolting agents onto workflows designed for humans. That’s a temporary fix. The real opportunity is to reimagine developer platforms from the ground up for agent-native workflows. That’s exactly what we’re building at Netlify by obsessing over AX.

In the decade of agents, any company that wants to thrive and survive must build for them or become irrelevant.